
[Deep Learning] Neural Network for predicting XOR operations in Python

Deep-Learning/[Vision] Practice, 2019. 4. 9. 05:43

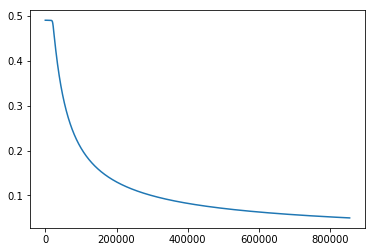

Problem: Train an NN with the sigmoid activation function, then show the learning curve together with the output of the NN-based XOR logic.

Condition: Using Python, design an artificial neural network with 2 input units, 2 hidden units, and 1 output unit.
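As a rough sketch of what the 2 x 2 x 1 layout means in terms of array shapes, the snippet below pushes the four XOR input patterns through two weight matrices. The names `W_in_hidden` and `W_hidden_out`, the seed, and the uniform initialization are assumptions made for illustration only. Like the source code further down, the sketch has no bias terms.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

rng = np.random.default_rng(0)  # illustrative seed, not the one used in this post

# Four XOR input patterns, one per column after transposing: shape (2, 4)
inputs = np.array([[0.99, 0.99], [0.99, 0.01], [0.01, 0.99], [0.01, 0.01]]).T

# Hypothetical weights: rows index the receiving layer, columns the sending layer
W_in_hidden = rng.uniform(-0.5, 0.5, size=(2, 2))   # 2 hidden units x 2 input units
W_hidden_out = rng.uniform(-0.5, 0.5, size=(1, 2))  # 1 output unit x 2 hidden units

hidden = sigmoid(W_in_hidden @ inputs)    # shape (2, 4)
output = sigmoid(W_hidden_out @ hidden)   # shape (1, 4)

print(hidden.shape, output.shape)
```

Each column of `output` is the network's answer for one of the four input patterns, so the whole truth table is evaluated in one pass.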

* Design approach

1. NNs class design

2. plot() the error as it decreases during training

3. Run a test once the model is complete

※ When XOR logic is trained with an NN, 2 hidden nodes make training take too long and make a suitable learning rate hard to find, so using 3 or more hidden nodes is recommended.
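To try the effect of the hidden-node count for yourself, a compact sketch along the following lines could be used. The helper `train_xor` is hypothetical and is not the class from this post: it uses a uniform initialization through `np.random.default_rng`, so the numbers it prints will not match the log shown later, and no particular outcome is claimed here.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def train_xor(n_hidden, learning_rate=0.18, n_iter=5000, seed=2019):
    """Train a bias-free 2 x n_hidden x 1 sigmoid network on XOR.

    Returns the mean absolute error recorded at every iteration.
    """
    rng = np.random.default_rng(seed)
    x = np.array([[0.99, 0.99], [0.99, 0.01], [0.01, 0.99], [0.01, 0.01]]).T  # (2, 4)
    t = np.array([[0.01, 0.99, 0.99, 0.01]])                                   # (1, 4)
    w1 = rng.uniform(-0.5, 0.5, size=(n_hidden, 2))  # assumed initialization
    w2 = rng.uniform(-0.5, 0.5, size=(1, n_hidden))
    history = []
    for _ in range(n_iter):
        h = sigmoid(w1 @ x)              # hidden activations, (n_hidden, 4)
        y = sigmoid(w2 @ h)              # network outputs, (1, 4)
        err = t - y
        history.append(float(np.mean(np.abs(err))))
        delta_out = err * y * (1.0 - y)
        delta_hidden = (w2.T @ delta_out) * h * (1.0 - h)  # computed before w2 changes
        w2 += learning_rate * delta_out @ h.T
        w1 += learning_rate * delta_hidden @ x.T
    return history

for n_hidden in (2, 3, 4):
    history = train_xor(n_hidden)
    print(n_hidden, "hidden nodes -> error after", len(history), "iterations:", history[-1])
```

Plotting the returned histories on the same axes gives a direct visual comparison of how quickly each configuration learns.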

※ To make the run reproducible, the random numbers were fixed with seed(2019). With this seed, a suitable learning rate was roughly between 0.015 and 0.185. (Above that range training does fall into a local minimum, although it eventually escapes given enough iterations.)
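The learning-rate range above is tied to the seed because the seed determines the initial weights. A minimal sketch of that reproducibility, assuming a hypothetical helper `draw_weights` and a uniform distribution (the post itself draws from a truncated normal):

```python
import numpy as np

def draw_weights(seed, shape=(2, 2)):
    # Re-seeding the global NumPy generator restarts its random sequence
    np.random.seed(seed)
    return np.random.uniform(-0.5, 0.5, size=shape)

first = draw_weights(2019)
second = draw_weights(2019)
other = draw_weights(2020)

print(np.array_equal(first, second))  # same seed, same initial weights
print(np.array_equal(first, other))   # a different seed gives a different start
```

Because a different seed gives a different starting point, a learning rate tuned for seed 2019 may well need retuning for another seed.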

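※ As working through the calculation by hand shows, a network trained with only 2 hidden nodes easily falls into a local minimum. To avoid this, the model here is trained with a small learning rate so that each update changes the weights only slightly, which is why it needs a very large number of iterations.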
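For readers who want to do that hand calculation, the update rules implemented by the `train` method in the source code can be written out as follows. The symbols are introduced here for illustration: $x$ is the input, $t$ the target, $W_1$ and $W_2$ the input-to-hidden and hidden-to-output weights, $\eta$ the learning rate, and $\odot$ the element-wise product. The rules are gradient descent on the squared error $E = \tfrac{1}{2}\lVert t - y \rVert^2$. The mean absolute error in the code is used only for logging and for the stopping test.

```latex
\begin{aligned}
h &= \sigma(W_1 x), \qquad y = \sigma(W_2 h), \qquad \sigma(z) = \frac{1}{1 + e^{-z}} \\
\delta_o &= (t - y) \odot y \odot (1 - y) \\
W_2 &\leftarrow W_2 + \eta \, \delta_o \, h^{\top} \\
\delta_h &= \left(W_2^{\top} \delta_o\right) \odot h \odot (1 - h) \\
W_1 &\leftarrow W_1 + \eta \, \delta_h \, x^{\top}
\end{aligned}
```

Every weight change is proportional to $\eta$, so a small learning rate moves the weights only slightly per iteration and many iterations are needed before the error falls.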

Source code

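import numpy as np
from matplotlib import pyplot as plt
from scipy.stats import truncnorm

# Fix the random seed so the weight initialization is reproducible
np.random.seed(2019)

def sigmoid(x):
    return 1 / (1 + np.e ** -x)

activation_function = sigmoid

def truncated_normal(mean=0, sd=1, low=0, upp=10):
    # Normal distribution truncated to the interval [low, upp]
    return truncnorm(
        (low - mean) / sd, (upp - mean) / sd, loc=mean, scale=sd)

# Mean absolute error recorded at every training iteration
errors = []

# XOR truth table, with 0.01 / 0.99 standing in for 0 / 1
Input_Data = np.array([[0.99, 0.99], [0.99, 0.01], [0.01, 0.99], [0.01, 0.01]])
Output_Data = np.array([[0.01], [0.99], [0.99], [0.01]])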

class NeuralNetwork:

    def __init__(self,
                 no_of_in_nodes,
                 no_of_out_nodes,
                 no_of_hidden_nodes,
                 learning_rate):
        self.no_of_in_nodes = no_of_in_nodes
        self.no_of_out_nodes = no_of_out_nodes
        self.no_of_hidden_nodes = no_of_hidden_nodes
        self.learning_rate = learning_rate
        self.create_weight_matrices()

    def create_weight_matrices(self):
        """ A method to initialize the weight matrices of the neural network"""
        rad = 1 / np.sqrt(self.no_of_in_nodes)
        X = truncated_normal(mean=0, sd=1, low=-rad, upp=rad)
        self.weights_in_hidden = X.rvs((self.no_of_hidden_nodes,
                                        self.no_of_in_nodes))

        rad = 1 / np.sqrt(self.no_of_hidden_nodes)
        X = truncated_normal(mean=0, sd=1, low=-rad, upp=rad)
        self.weights_hidden_out = X.rvs((self.no_of_out_nodes,
                                         self.no_of_hidden_nodes))

    def train(self, input_vector, target_vector):
        # input_vector and target_vector can be tuple, list or ndarray
        input_vector = np.array(input_vector, ndmin=2).T
        target_vector = np.array(target_vector, ndmin=1).T

        # hidden layer
        output_vector1 = np.dot(self.weights_in_hidden, input_vector)
        output_vector_hidden = activation_function(output_vector1)

        # output layer
        output_vector2 = np.dot(self.weights_hidden_out, output_vector_hidden)
        output_vector_network = activation_function(output_vector2)

        # errors: log the mean absolute error of this iteration
        output_errors = target_vector - output_vector_network
        errors.append(np.mean(abs(output_errors)))

        # update the hidden-to-output weights:
        tmp = output_errors * output_vector_network * (1.0 - output_vector_network)
        tmp = self.learning_rate * np.dot(tmp, output_vector_hidden.T)
        self.weights_hidden_out += tmp

        # calculate hidden errors:
        # (note: this uses weights_hidden_out after the update above;
        # textbook backpropagation would use the pre-update weights)
        hidden_errors = np.dot(self.weights_hidden_out.T, output_errors * output_vector_network * (1.0 - output_vector_network))

        # update the input-to-hidden weights:
        tmp = hidden_errors * output_vector_hidden * (1.0 - output_vector_hidden)
        self.weights_in_hidden += self.learning_rate * np.dot(tmp, input_vector.T)

    def run(self, input_vector):
        # input_vector can be tuple, list or ndarray
        input_vector = np.array(input_vector, ndmin=2).T
        output_vector = np.dot(self.weights_in_hidden, input_vector)
        output_vector = activation_function(output_vector)
        output_vector = np.dot(self.weights_hidden_out, output_vector)
        output_vector = activation_function(output_vector)
        return output_vector

simple_network = NeuralNetwork(no_of_in_nodes=2,
                               no_of_out_nodes=1,
                               no_of_hidden_nodes=2,
                               learning_rate=0.18)

# Train on the full batch until the mean absolute error drops below 0.05
i = 0
while True:
    i += 1
    simple_network.train(Input_Data, Output_Data)
    if i % 1000 == 0:
        print("error of ", i, "th iteration is ", errors[i-1])
    if errors[i-1] < 0.05:
        break

# Learning curve
plt.plot(errors)
plt.show()

# Test: shuffle the input rows and print the rounded predictions
np.random.shuffle(Input_Data)
cls1 = simple_network.run(Input_Data)
for i in range(len(Input_Data)):
    print(int(round(Input_Data[i][0])), " XOR ", int(round(Input_Data[i][1])), "=", int(round(cls1[0][i], 0)))

Result

error of 1000 th iteration is 0.4900505943890506

error of 2000 th iteration is 0.49003787025401413

error of 3000 th iteration is 0.49002842533942387

error of 4000 th iteration is 0.4900210896865711

error of 5000 th iteration is 0.49001505110264026

error of 6000 th iteration is 0.49000974435099676

error of 7000 th iteration is 0.4900047296310077

error of 8000 th iteration is 0.48999960512612345

error of 9000 th iteration is 0.4899939308591982

... (omitted)

error of 848000 th iteration is 0.05024408880132902

error of 849000 th iteration is 0.050205018685919874

error of 850000 th iteration is 0.050166023821701385

error of 851000 th iteration is 0.05012710397375888

error of 852000 th iteration is 0.05008825890818987

error of 853000 th iteration is 0.05004948839210268

error of 854000 th iteration is 0.05001079219360624

0 XOR 1 = 1

1 XOR 0 = 1

0 XOR 0 = 0

1 XOR 1 = 0
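The final check above rounds each network output to 0 or 1. A small sketch of that step, compared against `np.logical_xor`, is given below. The helper `to_bit` is hypothetical, and the `outputs` array holds stand-in values chosen for illustration, not numbers produced by the trained network.

```python
import numpy as np

def to_bit(value):
    # Values near 0.01 map to 0 and values near 0.99 map to 1
    return int(round(float(value)))

inputs = np.array([[0.01, 0.99], [0.99, 0.01], [0.01, 0.01], [0.99, 0.99]])
# Stand-in outputs for illustration only; NOT values produced by the trained network
outputs = np.array([0.95, 0.95, 0.05, 0.05])

matches = []
for (a, b), y in zip(inputs, outputs):
    expected = int(np.logical_xor(to_bit(a), to_bit(b)))
    matches.append(to_bit(y) == expected)
    print(to_bit(a), "XOR", to_bit(b), "=", to_bit(y), "| matches truth table:", matches[-1])
```

Since training stops once the mean absolute error over the four patterns is below 0.05, the four errors sum to less than 0.2, so every individual output lies within 0.2 of its target and rounds to the correct bit.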

※ github link : https://github.com/SeongkukCHO/PCOY/blob/master/NNs/XOR.py